In an NSX-T environment, there are scenarios where a Manager node has to be removed from the cluster because of abnormal conditions.

For example, the upgrade of a Manager node instance may run into issues, or a node may end up in a state from which it cannot be recovered through the NSX-T Manager UI.

In such cases, the node has to be recovered or replaced in the Manager cluster manually.

Let's walk through the manual procedure for recovering or replacing a Manager node in the cluster.

1) Log in to the CLI of an NSX-T Manager node that will remain in the cluster.

2) Run the command `get cluster status`.

This command lists all NSX-T Manager/Controller nodes in the cluster.

Note the UUID of the cluster and the UUID of the node that needs to be recovered or replaced.

3) Now that we have identified the Manager node ID with the above command, it's time to detach the node from the cluster.

Running `detach node <node-id>` from a Manager node that remains in the cluster removes the specified node from the cluster.

After this, that node is no longer part of the cluster or the NSX-T environment.
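Copying UUIDs by hand from the `get cluster status` output is easy to get wrong. A small Python sketch can validate them and assemble the detach line before anything is typed into the CLI. The helper `build_detach_command` is hypothetical and not part of NSX-T. It assumes the command is run from a Manager node that stays in the cluster, so it refuses to target the node you are logged in to. All UUIDs in the example are placeholders.

```python
import uuid


def build_detach_command(target_node_id: str, local_node_id: str) -> str:
    """Return the NSX-T CLI line that detaches target_node_id from the cluster.

    local_node_id is the UUID of the node whose CLI you are logged in to.
    The helper refuses to detach that node, on the assumption that
    `detach node` is run from a node that stays in the cluster.
    """
    # uuid.UUID raises ValueError for anything that is not a well-formed UUID,
    # which catches truncated or mistyped IDs copied from `get cluster status`.
    target = uuid.UUID(target_node_id.strip())
    local = uuid.UUID(local_node_id.strip())
    if target == local:
        raise ValueError("run 'detach node' from a node that remains in the cluster")
    # str(uuid.UUID) yields the canonical lowercase, hyphenated form.
    return f"detach node {target}"
```

For example, `build_detach_command("11111111-2222-3333-4444-555555555555", "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee")` returns `"detach node 11111111-2222-3333-4444-555555555555"`.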

Once you have deployed a new NSX-T Manager node, it has to be added to the cluster.

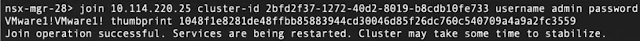

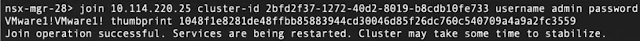

4) To add the node manually, you need the API certificate thumbprint of the cluster so that the new node can be associated with it.

Run `get certificate api thumbprint` on a Manager node that is already in the cluster to retrieve the thumbprint.
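As an optional cross-check, the thumbprint can also be computed from a workstation. This Python sketch assumes that the value printed by `get certificate api thumbprint` is the SHA-256 digest of the DER-encoded certificate that the Manager serves on port 443, written as lowercase hex without separators. Verify that assumption against the CLI output before relying on it. The function names are hypothetical.

```python
import hashlib
import ssl


def thumbprint_from_der(der_cert: bytes) -> str:
    """Return the SHA-256 digest of a DER-encoded certificate as lowercase hex."""
    return hashlib.sha256(der_cert).hexdigest()


def fetch_api_thumbprint(manager_ip: str, port: int = 443) -> str:
    """Fetch the certificate served on manager_ip:port and return its SHA-256 thumbprint.

    No CA bundle is passed, so the certificate is retrieved without
    verification. This works with self-signed certificates, but the result
    should still be compared with the CLI output before it is trusted.
    """
    pem = ssl.get_server_certificate((manager_ip, port))
    return thumbprint_from_der(ssl.PEM_cert_to_DER_cert(pem))
```

For example, `fetch_api_thumbprint("192.0.2.10")` (a placeholder IP) returns a 64-character hex string. If it differs from what the CLI reports, use the CLI value.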

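5) Once we have the API thumbprint, we can add the new node by running `join <manager-ip> cluster-id <cluster-id> username <username> password <password> thumbprint <api-thumbprint>` on it. Here `<manager-ip>` is the IP address of a Manager node already in the cluster and `<cluster-id>` is the cluster UUID noted in step 2.

Once the join completes, the new node is part of the NSX-T cluster.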
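A small Python sketch can assemble the `join` line for the new node and catch malformed values before they are typed into the CLI. It assumes the syntax `join <manager-ip> cluster-id <cluster-id> username <username> password <password> thumbprint <thumbprint>`. The helper `build_join_command` and all sample values are hypothetical.

```python
import ipaddress
import uuid


def build_join_command(manager_ip: str, cluster_id: str,
                       username: str, password: str, thumbprint: str) -> str:
    """Assemble the `join` line to run on the newly deployed Manager node.

    manager_ip -- IP of a Manager node that is already in the cluster
    cluster_id -- cluster UUID noted from `get cluster status`
    thumbprint -- output of `get certificate api thumbprint` on that node
    """
    ip = ipaddress.ip_address(manager_ip.strip())  # ValueError if not a valid IP
    cid = uuid.UUID(cluster_id.strip())            # ValueError if not a valid UUID
    tp = thumbprint.strip()
    if not tp:
        raise ValueError("thumbprint must not be empty")
    return (f"join {ip} cluster-id {cid} username {username} "
            f"password {password} thumbprint {tp}")
```

The returned string contains the password in plain text, so it should not be logged or saved.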

6) Next, identify which Manager node in the cluster is the orchestrator node, that is, the node on which the install-upgrade service (the upgrade coordinator) is enabled.

This service is a self-contained web application that orchestrates the upgrade of hosts, the NSX Controller cluster, and the management plane.

You can check which node is the orchestrator node by running the CLI command `get service install-upgrade`. The IP address of the orchestrator node is shown in the "Enabled-on" field of the output.

Running `set repository-ip` on a Manager node makes that node the orchestrator node. This is required when the orchestrator node (the node on which the install-upgrade service is enabled) is the node being detached from the management plane (MP) cluster.

Note: Changing the IP address of a Manager node follows the same procedure.
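The orchestrator check from step 6 can also be sketched in Python. The sketch assumes that the `get service install-upgrade` output contains a line of the form `Enabled on: <IPv4>` or `Enabled-on: <IPv4>`, as in the sample below. That sample is illustrative and not copied from a real system, and the helper names are hypothetical. If the node being detached turns out to be the orchestrator, a different Manager node should be made the orchestrator with `set repository-ip`.

```python
import ipaddress
import re
from typing import Optional

# "Enabled on" or "Enabled-on", an optional colon, then an IPv4 address on the same line.
_ENABLED_ON = re.compile(r"Enabled[- ]on[ \t]*:?[ \t]*(\d{1,3}(?:\.\d{1,3}){3})",
                         re.IGNORECASE)


def orchestrator_ip(install_upgrade_output: str) -> Optional[str]:
    """Extract the orchestrator node IP from `get service install-upgrade` output."""
    match = _ENABLED_ON.search(install_upgrade_output)
    if match is None:
        return None
    # ip_address rejects out-of-range octets such as 999.
    return str(ipaddress.ip_address(match.group(1)))


def needs_repository_ip_move(install_upgrade_output: str, detaching_ip: str) -> bool:
    """Return True if the node being detached is the current orchestrator node."""
    current = orchestrator_ip(install_upgrade_output)
    if current is None:
        raise ValueError("no 'Enabled on' line found; check the output manually")
    return ipaddress.ip_address(current) == ipaddress.ip_address(detaching_ip)
```

For example, with output containing `Enabled on: 192.0.2.11`, `needs_repository_ip_move(output, "192.0.2.11")` returns `True`.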

This concludes the manual process for adding an NSX-T Manager/Controller node to the cluster.

If you like the contents of this article then please share it further on the social platforms. :)
